4 hacks you can exploit to fool real-world AI

What do your dream company, Tesla, the London Met Police, Facebook and Microsoft have in common?

They all rely on AI.

And as I mentioned in my previous post, AI is not always super smart and you can take advantage of that. Just follow the guide (disclaimer: I am not encouraging any illegal behaviour).

#1 Looking for a job? Adapt to your audience.

A number of AI programs for CV screening look for keywords in the text of your resume.

To pass them, you can exploit the discrepancy between what the AI reads and what the human eye perceives. If you write keywords such as “Harvard”, “MIT” and “Cambridge first class honours” in the background color of your resume, the AI will still read them but the recruiter will not see them. You will likely pass the AI screening without hurting your chances in the human screening, even if you tell the recruiter your degree is actually from UCL. Now you let me know if you think lying to an AI is unethical.
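To make the trick concrete, here is a minimal sketch of a naive keyword screener. The keyword list, the scoring rule and the sample resume text are all made up for illustration, and real screening tools will work differently. The point is only that a program reading the extracted text cannot tell visible words from invisible ones.

```python
import re

# Hypothetical keyword list; a real screening tool would use its own criteria.
KEYWORDS = {"harvard", "mit", "cambridge"}

def keyword_score(resume_text: str) -> int:
    """Count how many distinct keywords appear in the extracted resume text."""
    words = set(re.findall(r"[a-z]+", resume_text.lower()))
    return len(KEYWORDS & words)

# What the recruiter sees on the page.
visible_text = "BSc Computer Science, UCL. Two years as a data analyst."
# Text written in the background color: invisible to a human, plain text to a parser.
hidden_text = "Harvard MIT Cambridge first class honours"

honest_score = keyword_score(visible_text)
padded_score = keyword_score(visible_text + " " + hidden_text)
print(honest_score, padded_score)
```

The padded resume matches every keyword while the visible page stays unchanged.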

#2 Teslas are safe…except from evasion attacks

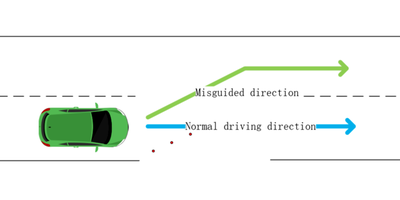

Tesla’s autonomous driving AI, like most computer vision models, uses deep neural networks, a kind of machine learning model loosely inspired by the visual cortex. However, these artificial neural networks differ from the human brain in significant ways that are hard to control. Researchers from Tencent’s Keen Security Lab have shown that such models are vulnerable to evasion attacks, whereby a slightly modified version of an input can mislead a model. In 2019 they managed to make a Tesla interpret three stickers on the road as a curved lane line and steer into the oncoming lane as a result, a trick which would not have fooled any human.
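Here is a toy sketch of how an evasion attack works. It is not Tesla's model or the researchers' method: the four-pixel "image", the weights and the bias are invented, and the detector is a plain linear classifier rather than a deep network. The attack nudges every pixel by a small amount in the direction that raises the model's score, which is the idea behind the fast gradient sign method.

```python
# Toy linear "lane detector": a score above 0 means "lane line".
# Weights, bias and pixel values are invented for illustration only.
weights = [0.9, -0.4, 0.7, -0.8]
bias = -0.3

def score(pixels):
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def predict(pixels):
    return "lane line" if score(pixels) > 0 else "no lane line"

def sign(x):
    return (x > 0) - (x < 0)

def evade(pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that raises the score.

    For a linear model the gradient of the score with respect to the input
    is the weight vector, so stepping along sign(weights) is a
    fast-gradient-sign-style perturbation. Pixels are clamped to [0, 1].
    """
    return [min(1.0, max(0.0, p + epsilon * sign(w)))
            for p, w in zip(pixels, weights)]

road = [0.5, 0.5, 0.5, 0.5]              # plain grey road
adversarial = evade(road, epsilon=0.1)   # each pixel moves by at most 0.1

print(predict(road), "->", predict(adversarial))
```

No pixel changes by more than 0.1, yet the prediction flips. Stickers on a road play the same role in the physical world.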

Even if you are lucky enough to own a Tesla, think twice before sleeping while driving…

If you want to fool an AI in a similar fashion, you might want to try out face camouflage in areas where cameras are connected to face recognition software (that is not just China, it is London too).

#3 Facebook censorship is vain…if you invent your language

I am part of a history memes group on Facebook, and last year I saw the Darty logo popping up very often in my feed. It turns out that Facebook censors Nazi flags, so meme makers resorted to that logo, which looks very similar, to represent the Third Reich. All insiders knew that the Darty logo stood for the Nazi flag, but Facebook’s censoring algorithm was oblivious to it.

When AI censors you, invent a coded language it does not understand.

#4 Microsoft’s Twitter bot, famous victim of poisoning attacks

Machine learning models learn from data, and sometimes that data comes straight from the public, meaning you can decide what the model will learn. Microsoft found that out in 2016 when it released Tay, a chatbot that learned from its conversations with Twitter users. Malicious users fed it hateful speech, which quickly corrupted the bot and turned it into an openly racist and misogynistic AI. Just like one of its thought leaders, Tay’s Twitter account was soon taken down.
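The mechanism is easy to reproduce in miniature. The sketch below is not how Tay worked: `ParrotBot` is a hypothetical bot invented for this post that simply repeats the phrase it has seen most often. It shows why any model that learns from unfiltered user input can be steered by whoever sends the most data.

```python
from collections import Counter

class ParrotBot:
    """Hypothetical bot that learns its replies from whatever users send it."""

    def __init__(self):
        self.seen = Counter()

    def learn(self, message: str) -> None:
        # No filtering: every user message becomes training data.
        self.seen[message] += 1

    def reply(self) -> str:
        # Answer with the phrase seen most often so far.
        return self.seen.most_common(1)[0][0]

bot = ParrotBot()
for msg in ["hello there", "nice to meet you", "hello there"]:
    bot.learn(msg)
before = bot.reply()

# A handful of coordinated users flood the bot with the same message.
for _ in range(5):
    bot.learn("<abusive message>")
after = bot.reply()

print(before, "->", after)
```

Five poisoned messages are enough to outvote the honest ones here; against a real system the attacker needs more volume, but the principle is the same.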

As big tech companies exploit online data about you to target their ads, you can use a similar technique to reduce their effectiveness. Telling Facebook that you are from Honolulu and live in Sydney like me (and faking your location) may increase the number of surfboard ads you receive and reduce the amount you would have spent in response to better-targeted ads.

Thank you so much for reading this. Let me know about other AI hacks you have come across. I love hearing these stories and I am sure they will save us when the world is invaded by killer robots.