Generative models and bias

You have probably heard of AI-generated art, or of deepfakes such as fake speeches by Obama.

Both are created by generative models, a kind of artificial neural network that can generate complex data such as images, text or sound. Many of the most striking image results rely on the Generative Adversarial Network (GAN) framework. The GAN concept, introduced by Ian Goodfellow and his co-authors in 2014, is arguably one of the inventions of the past decade that will have the most impact on our lives.

Once trained on a real dataset, these models can create realistic-looking synthetic data starting from nothing more than a vector of random numbers.

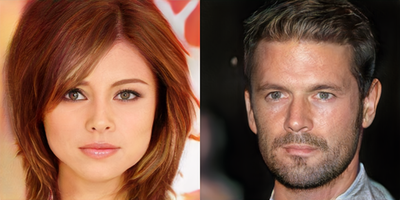
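To make the "random numbers in, data out" idea concrete, here is a minimal sketch. It is not a real GAN: the `make_toy_generator` function, the dimensions and the random weights are all assumptions of mine, standing in for a generator network whose weights would normally be learned from a dataset.

```python
import math
import random

LATENT_DIM = 4   # size of the random input vector (hypothetical value)
OUTPUT_DIM = 6   # size of the generated "data point" (hypothetical value)


def make_toy_generator(seed=0):
    """Return a fixed linear map followed by tanh.

    This is only a stand-in for a trained generator: in a real GAN
    the weights are learned, here they are simply random.
    """
    rng = random.Random(seed)
    weights = [
        [rng.gauss(0, 1) for _ in range(LATENT_DIM)]
        for _ in range(OUTPUT_DIM)
    ]

    def generator(z):
        # One output value per weight row, squashed into [-1, 1].
        return [
            math.tanh(sum(w * zi for w, zi in zip(row, z)))
            for row in weights
        ]

    return generator


def sample_latent(rng):
    """Draw the random input vector (often called z) from a normal distribution."""
    return [rng.gauss(0, 1) for _ in range(LATENT_DIM)]


generator = make_toy_generator()
rng = random.Random(42)
z = sample_latent(rng)   # the random starting point
x = generator(z)         # the "synthetic data" produced from it
```

The same `z` always gives the same `x`, and a different `z` gives a different output, which is the property the rest of this post builds on.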

In 2018, Nvidia introduced StyleGAN, a family of generative models that makes it possible to alter «genes», i.e. the defining features of an image.

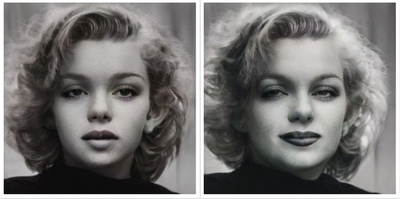

For example, we can change the ethnicity of Marilyn Monroe by increasing the value of the Asian gene or that of the African gene.

We can also tune the age or the yaw gene to make her younger or get the picture from a slightly different angle.
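A common way to think about tuning a gene is as moving the image's latent vector along a direction associated with that feature. The sketch below assumes this linear view; the `edit_latent` helper, the four-dimensional vectors and the directions are made up for illustration, and I am not claiming this is how any particular tool implements its sliders.

```python
def edit_latent(latent, direction, strength):
    """Move a latent vector along a feature direction: w' = w + strength * d."""
    if len(latent) != len(direction):
        raise ValueError("latent and direction must have the same length")
    return [w + strength * d for w, d in zip(latent, direction)]


# Hypothetical latent code of a portrait and two hypothetical "genes".
latent = [0.2, -1.0, 0.5, 0.0]
age_direction = [0.0, 1.0, 0.0, 0.0]
yaw_direction = [0.0, 0.0, 0.0, 1.0]

# A negative strength on the age gene means "younger".
younger = edit_latent(latent, age_direction, -2.0)
# Edits compose: turn the head slightly on top of the age change.
turned = edit_latent(younger, yaw_direction, 0.5)
```

In this idealised example the two directions do not overlap, so changing the age leaves the yaw untouched. The interesting cases are the ones where the directions do overlap.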

But there’s something wrong in each of these two synthetic images! Can you notice it?

First, in the picture on the right, the second ear does not seem to have an earring, although we would expect one since Marilyn’s other ear clearly has one in the original picture. This mistake is hard to interpret, but it shows that most machine learning models still lack awareness of some relationships that are common knowledge for humans.

More interestingly, the visible earring disappears in the younger picture even though we did not tweak the earring gene. This is most likely because the model was trained on a dataset where very few children had earrings. As a result, the model cannot properly separate the age gene from the earring gene. It may not seem like an issue in this case, but the same effect reveals a more significant bias: when we increase only the value of the earring gene, the person’s features look older.

This problem is known as feature entanglement. It is a major issue in StyleGANs, caused by training data that is unevenly distributed across combinations of features. As a result, models learn biased representations of reality, which can have serious consequences when they are deployed in real-world applications.
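Entanglement can be reproduced on a toy dataset without any neural network. In the sketch below, everything is an assumption of mine: the ages, the earring probabilities and the way the "earring direction" is estimated (the difference between the average sample with an earring and the average sample without one). It only illustrates the mechanism, not how StyleGAN works internally.

```python
import random


def make_dataset(n, p_earring_child, p_earring_adult, seed):
    """Build (age, earring) pairs; earring frequency depends on the age group."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        age = rng.uniform(0, 80)
        p = p_earring_child if age < 15 else p_earring_adult
        earring = 1.0 if rng.random() < p else 0.0
        data.append((age, earring))
    return data


def earring_direction(data):
    """Mean of samples with an earring minus mean of samples without.

    Returns (age_shift, earring_shift). A non-zero age_shift means
    that "adding an earring" also changes the age.
    """
    with_earring = [age for age, e in data if e == 1.0]
    without_earring = [age for age, e in data if e == 0.0]
    age_shift = (
        sum(with_earring) / len(with_earring)
        - sum(without_earring) / len(without_earring)
    )
    return (age_shift, 1.0)


# Biased data: almost no children wear earrings (made-up rates).
biased = make_dataset(20000, 0.02, 0.5, seed=0)
# Balanced data: earrings are equally common at every age.
balanced = make_dataset(20000, 0.5, 0.5, seed=0)

biased_shift, _ = earring_direction(biased)
balanced_shift, _ = earring_direction(balanced)
print(f"age shift, biased data:   {biased_shift:+.1f} years")
print(f"age shift, balanced data: {balanced_shift:+.1f} years")
```

With the biased data the estimated earring direction carries a clearly positive age component, so pushing along it makes the person older as a side effect. With the balanced data the age component stays close to zero.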

By experimenting on artbreeder.com I came across a number of other biases, namely:

- Sad people look Indian.

- People are younger when they wear a hat.

- Middle Eastern people look more like men than women.

All of these are likely because most of the sad-looking people in the training dataset looked Indian, most people with a hat were young, and most Middle Eastern people were men.
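If you had access to labels for the training images, one simple way to check such a hypothesis would be to compare how often a feature appears overall with how often it appears alongside another feature. The records below are invented for illustration and do not come from any real dataset; `base_rate` and `conditional_rate` are hypothetical helper names.

```python
def base_rate(records, target):
    """Fraction of all records that carry the `target` label."""
    return sum(1 for r in records if target in r) / len(records)


def conditional_rate(records, given, target):
    """Fraction of records with `target` among those that have `given`.

    Returns None when no record has the `given` label.
    """
    subset = [r for r in records if given in r]
    if not subset:
        return None
    return sum(1 for r in subset if target in r) / len(subset)


# Made-up label sets, one per image.
records = [
    {"hat", "young"},
    {"hat", "young"},
    {"hat", "young"},
    {"hat"},
    {"young"},
    set(),
    set(),
    set(),
]

p_young = base_rate(records, "young")
p_young_given_hat = conditional_rate(records, "hat", "young")
print(f"P(young) = {p_young:.2f}, P(young | hat) = {p_young_given_hat:.2f}")
```

A large gap between the two rates means the features co-occur more often than chance would suggest, which is exactly the situation where a model is likely to entangle them.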

If you have a hacker mindset, I encourage you to visit that website, experiment, and see if you can find other biases. Thanks for reading until the end, it makes me happy!

PS: Don’t hesitate to ask me questions about generative models, whether technical or philosophical.